Welcome to the Azure 70-533 Exam series. This is the first post of the Objective 3 section, Design and Implement a Storage Strategy, and it is titled Implement Azure Storage Blobs and Azure Files.

As the section title suggests, this section deals entirely with core Azure Storage concepts, unlike the storage topics we covered earlier in the context of Azure VMs.

In this post of Objective 3.1 - Implement Azure Storage Blobs and Azure Files, the sub-objectives that we will be looking at are:

- Identify appropriate blob type for specific storage requirements

- Read data

- Change data

- Set metadata on a container

- Store data using block and page blobs

- Stream data using blobs

- Access blobs securely

- Implement async blob copy

- Configure Content Delivery Network (CDN)

- Design blob hierarchies

- Configure custom domains

- Scale blob storage

- Manage SMB File storage

- Implement Azure StorSimple

This is probably going to be the largest post in this series since we have a lot of sub-objectives to cover.

Identify appropriate blob type for specific storage requirements

A blob is a single entity made up of binary data, properties, and metadata. The word ‘Blob’ stands for Binary Large Object.

Keep the following naming rules in mind before creating blobs:

- A blob name can contain any combination of characters.

- A blob name must be at least one character long and cannot be more than 1,024 characters long.

- Blob names are case-sensitive. (The restriction to lowercase letters, numbers, and hyphens applies to container names, not blob names.)
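These rules are easy to check in code. Below is a minimal Python sketch. The helper names are hypothetical. It checks the blob name length rule above and the container naming rules: 3 to 63 characters, made up of lowercase letters, numbers, and single hyphens, starting and ending with a letter or number. Other documented recommendations, such as not ending a blob name with a dot, are not checked.

```python
import re


def is_valid_blob_name(name: str) -> bool:
    """Blob names may use any characters; only the 1..1024 length rule is checked."""
    return 1 <= len(name) <= 1024


def is_valid_container_name(name: str) -> bool:
    """Container names: 3-63 chars of lowercase letters, digits and single hyphens."""
    if not 3 <= len(name) <= 63:
        return False
    if not re.fullmatch(r"[a-z0-9-]+", name):
        return False
    # Must start and end with a letter or digit; hyphens may not be consecutive.
    return name[0] != "-" and name[-1] != "-" and "--" not in name
```

Note that `Pictures/Picture1.PNG` is a perfectly valid blob name, while `MyContainer` is not a valid container name.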

As of this writing, Microsoft Azure offers three types of blobs: block blobs, append blobs, and page blobs.

Here is what each type is for:

- Block blobs are for discrete storage objects such as JPEG images and log files, which you would typically view as files in your local OS. Max. size approx. 4.77 TiB.

- Page blobs are for random read/write storage, such as VHDs (page blobs back Azure Virtual Machine disks). Max. size 8 TiB. Supported by both Standard and Premium Storage.

- Append blobs are made up of blocks like block blobs, but they are optimized for append operations: new blocks can only be added to the end of the blob. E.g.: log files. Max. size approx. 195 GiB.
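The rules of thumb above can be condensed into a small decision helper. This is a hypothetical Python sketch, not an Azure API. The enum values are the strings the Blob service REST API uses in its `x-ms-blob-type` header.

```python
from enum import Enum


class BlobType(Enum):
    # Values match the x-ms-blob-type header of the Blob service REST API.
    BLOCK = "BlockBlob"
    PAGE = "PageBlob"
    APPEND = "AppendBlob"


def choose_blob_type(random_writes: bool, append_only: bool) -> BlobType:
    """Hypothetical helper encoding the guidance for picking a blob type."""
    if random_writes:
        return BlobType.PAGE    # e.g. VHDs / VM disks with random read/write IO
    if append_only:
        return BlobType.APPEND  # e.g. log files that only ever grow at the end
    return BlobType.BLOCK       # discrete files: images, documents, backups
```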

Read Data

As discussed in our earlier articles, there are various ways that one can read the data associated with a storage account.

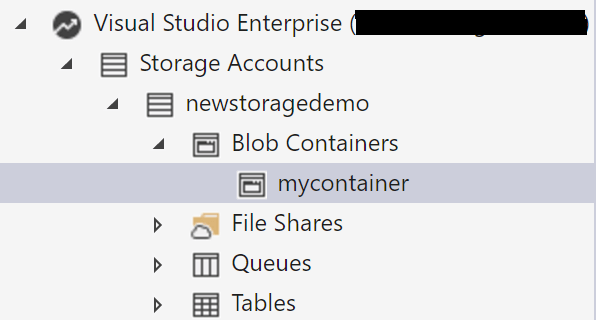

For this example, I will be creating a new storage account called newstoragedemo.

Within a storage account, there are various types of storage services available, which are:

- Blob storage: Unstructured file data

- Table storage: NoSQL semi-structured data

- Queue storage: Messaging between application components

- File storage: SMB file shares

One way to read the data in a storage account is with Microsoft Azure Storage Explorer, which is a free utility.

We need to create a container first to store any type of blob. In my case, I have a container called mycontainer.
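Whichever tool you use, every blob is addressed by a URL of the form `https://<account>.blob.core.windows.net/<container>/<blob>`. Below is a minimal Python sketch that builds such a URL from the `newstoragedemo` account and the `mycontainer` container used in this post. The `blob_url` helper and the blob name are hypothetical. The sketch adds no authentication, so an anonymous GET on the resulting URL only succeeds if the container allows public read access.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the Blob service URL of a blob (no authentication is added)."""
    # Reserved URL characters in blob names must be percent-encoded;
    # "/" is kept as-is because it separates virtual directories.
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name, safe='/')}"


print(blob_url("newstoragedemo", "mycontainer", "my photo.jpg"))
```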

Change Data

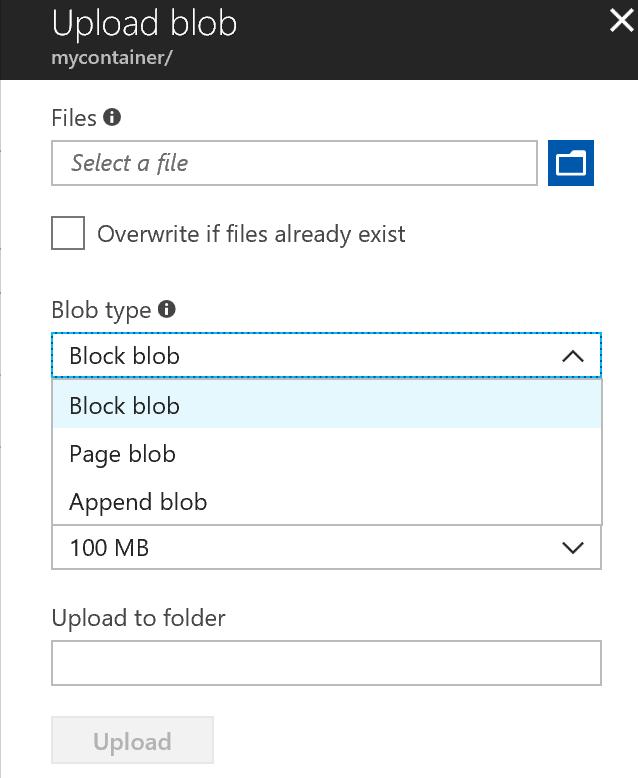

In this section, we will add a file to an existing container and look at the options available to us. You can do this either in the Azure portal or with Storage Explorer.

As shown in the screenshot, clicking the Upload button lets you select a file. Expanding the Advanced section lets you specify the blob type.

Note: Once a blob has been created, its type cannot be changed.
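The blob type is fixed at creation because it is sent in the `x-ms-blob-type` header of the REST API's Put Blob request. Below is a minimal Python sketch of the type-specific headers. The helper is hypothetical. It omits `Authorization`, `x-ms-date` and `x-ms-version` and sends nothing over the network. It reflects the documented rule that Put Blob only initializes page and append blobs, and that a page blob's size must be a multiple of 512 bytes.

```python
def put_blob_headers(blob_type: str, body: bytes = b"", page_blob_size: int = 0) -> dict:
    """Return the type-specific headers of a Put Blob request (hypothetical helper)."""
    if blob_type == "BlockBlob":
        # A (small) block blob can be uploaded together with its content.
        return {"x-ms-blob-type": "BlockBlob", "Content-Length": str(len(body))}
    if blob_type in ("PageBlob", "AppendBlob") and body:
        raise ValueError("Put Blob only initializes page and append blobs; body must be empty")
    if blob_type == "PageBlob":
        # The page blob's maximum size is set here and must be 512-byte aligned.
        if page_blob_size < 0 or page_blob_size % 512 != 0:
            raise ValueError("page blob size must be a non-negative multiple of 512")
        return {"x-ms-blob-type": "PageBlob", "Content-Length": "0",
                "x-ms-blob-content-length": str(page_blob_size)}
    if blob_type == "AppendBlob":
        # Data is added afterwards with Append Block operations.
        return {"x-ms-blob-type": "AppendBlob", "Content-Length": "0"}
    raise ValueError(f"unknown blob type: {blob_type!r}")
```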

Set Metadata on a container

Metadata for a container or blob resource is stored as name-value pairs associated with the resource.

You can set and read container metadata through the Azure Storage REST API (the Set Container Metadata and Get Container Metadata operations), as well as through the client libraries and PowerShell.

There is also a very good article about accessing the properties of Azure blobs with PowerShell.
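With the REST API, each name-value pair travels as an `x-ms-meta-<name>` header. Below is a minimal Python sketch that builds a Set Container Metadata request and reads metadata back from response headers. The helpers are hypothetical. Authentication headers are omitted and nothing is sent. `str.isidentifier()` is used as an approximation of the rule that metadata names must be valid C# identifiers. Keep in mind that Set Container Metadata replaces all existing metadata on the container.

```python
def set_container_metadata_request(account: str, container: str, metadata: dict):
    """Build (method, url, headers) for Set Container Metadata (hypothetical helper)."""
    url = (f"https://{account}.blob.core.windows.net/{container}"
           "?restype=container&comp=metadata")
    headers = {}
    for name, value in metadata.items():
        # Metadata names must follow C# identifier rules; isidentifier() approximates this.
        if not name.isidentifier():
            raise ValueError(f"invalid metadata name: {name!r}")
        headers[f"x-ms-meta-{name}"] = value
    return "PUT", url, headers


def metadata_from_headers(headers: dict) -> dict:
    """Extract name-value metadata pairs from x-ms-meta-* response headers."""
    prefix = "x-ms-meta-"
    return {key[len(prefix):]: value for key, value in headers.items()
            if key.lower().startswith(prefix)}
```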

Store data using block and page blobs

As mentioned above, there are three types of blobs: block blobs, page blobs, and append blobs.

Block blobs can be used to store files of any type, which is typically unstructured data.

Page blobs are the right choice when a workload performs frequent random reads and writes (random read/write IOPS), such as VM disks.
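Under the hood, a large block blob is uploaded as individual blocks (Put Block) that are then committed in order with a single Put Block List call. Page blob writes, by contrast, must be aligned to 512-byte pages. Below is a minimal Python sketch of both ideas. The helper names and the 4 MiB default block size are illustrative assumptions. The client libraries normally handle all of this for you.

```python
import base64


def split_into_blocks(data: bytes, block_size: int = 4 * 1024 * 1024):
    """Split data into (block_id, chunk) pairs, in the order they would be committed."""
    blocks = []
    for index, start in enumerate(range(0, len(data), block_size)):
        # Block IDs are Base64 strings that must all have the same length within
        # one blob, so the index is zero-padded to a fixed width before encoding.
        block_id = base64.b64encode(f"{index:06d}".encode("ascii")).decode("ascii")
        blocks.append((block_id, data[start:start + block_size]))
    return blocks


def is_page_aligned(offset: int, length: int) -> bool:
    """A page blob write must start on a 512-byte boundary and cover whole pages."""
    return length > 0 and offset % 512 == 0 and length % 512 == 0
```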

Stream data using Blobs

To follow along with this topic, we first need to understand why you would want to stream data. The following marketing video from Microsoft explains this.

Now that you understand what the Azure Stream Analytics service does, this Microsoft docs page explains how to stream data from Azure Blob storage into Data Lake Store using Azure Stream Analytics.

Access Blobs securely

There are three different access levels that can be applied to a container or the blobs.

- No public read access: The container and its blobs can be accessed only by the storage account owner. This is the default for all new containers.

- Public read access for blobs only: Blobs within the container can be read by anonymous request, but container data is not available. Anonymous clients cannot enumerate the blobs within the container.

- Full public read access: All container and blob data can be read by anonymous request. Clients can enumerate blobs within the container by anonymous request, but cannot enumerate containers within the storage account.
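The three access levels can be summarized as a lookup of which anonymous operations each one permits. Below is a minimal Python sketch. The operation names and the helper are hypothetical labels. `blob` and `container` are the values of the REST API's `x-ms-blob-public-access` header, and `private` stands for the header being absent.

```python
# Anonymous operations permitted for each container access level:
# "private"   = No public read access
# "blob"      = Public read access for blobs only
# "container" = Full public read access
ANONYMOUS_OPERATIONS = {
    "private": frozenset(),
    "blob": frozenset({"read_blob"}),
    "container": frozenset({"read_blob", "read_container", "list_blobs"}),
}


def anonymous_request_allowed(access_level: str, operation: str) -> bool:
    """Return True if an unauthenticated request may perform the operation."""
    if access_level not in ANONYMOUS_OPERATIONS:
        raise ValueError(f"unknown access level: {access_level!r}")
    # "list_containers" appears in no set: listing the containers in an
    # account is never allowed anonymously.
    return operation in ANONYMOUS_OPERATIONS[access_level]
```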

To access blobs securely, set the container's access level to No public read access. The access level is set on the container, and the blobs under it inherit the same access level.

There is also an Azure storage security guide available which goes into much more detail about the various options available from the security perspective.

Implement Async Blob Copy

One of the easiest ways to copy data between storage accounts is the asynchronous server-side copy capability of Azure Storage, which is exposed in PowerShell through the Start-CopyAzureStorageBlob cmdlet.

The advantage of this method is that the data is copied over Microsoft's network instead of being downloaded to and re-uploaded from your own machine.

Another useful property applies to page blobs: only the used space is copied. Your VHDs might be large while the data actually written to them is much smaller, so copying only the used pages saves you money on egress traffic.

Below is a handy PowerShell script that you could use to perform asynchronous blob copy.

```powershell
# Source and destination storage accounts (replace with your own values)
$SourceStorageAccount = "storageaccount1"
$SourceStorageKey = "yourKey1=="
$DestStorageAccount = "storageaccount2"
$DestStorageKey = "yourKey2=="
$SourceStorageContainer = 'vhds'
$DestStorageContainer = 'vhds'   # the destination container must already exist

# Create storage contexts for both accounts
$SourceStorageContext = New-AzureStorageContext -StorageAccountName $SourceStorageAccount -StorageAccountKey $SourceStorageKey
$DestStorageContext = New-AzureStorageContext -StorageAccountName $DestStorageAccount -StorageAccountKey $DestStorageKey

# Enumerate all blobs in the source container
$Blobs = Get-AzureStorageBlob -Context $SourceStorageContext -Container $SourceStorageContainer

$BlobCpyAry = @() # Collects the copy operations so they can be monitored later

# Start an asynchronous server-side copy for every blob
foreach ($Blob in $Blobs)
{
    # $() is needed so that the Name property is expanded inside the string
    Write-Output "Copying $($Blob.Name)"
    $BlobCopy = Start-CopyAzureStorageBlob -Context $SourceStorageContext -SrcContainer $SourceStorageContainer -SrcBlob $Blob.Name `
        -DestContext $DestStorageContext -DestContainer $DestStorageContainer -DestBlob $Blob.Name
    $BlobCpyAry += $BlobCopy
}
```

Just replace the variables with your use case and you are good to go.

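You can check the progress of each copy with the following script.

```powershell
# Report the status and progress of each copy operation started above
foreach ($BlobCopy in $BlobCpyAry)
{
    $CopyState = $BlobCopy | Get-AzureStorageBlobCopyState
    # Guard against dividing by zero when the total size is not (yet) known
    $Percent = 0
    if ($CopyState.TotalBytes -gt 0) { $Percent = ($CopyState.BytesCopied / $CopyState.TotalBytes) * 100 }
    $Message = $CopyState.Source.AbsolutePath + " " + $CopyState.Status + " {0:N2}%" -f $Percent
    Write-Output $Message
}
```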
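If you query the REST API directly instead of using the cmdlets, copy progress comes back in the `x-ms-copy-progress` header as `<bytes copied>/<bytes total>`, alongside a status in `x-ms-copy-status` (for example `pending` or `success`). Below is a minimal Python sketch that reproduces the PowerShell progress message from those raw values. The helper names and the sample path are hypothetical.

```python
def copy_progress_percent(copy_progress: str) -> float:
    """Convert an x-ms-copy-progress value ("<copied>/<total>") to a percentage."""
    copied, total = (int(part) for part in copy_progress.split("/"))
    if total == 0:
        return 0.0  # avoid dividing by zero, e.g. for an empty source blob
    return copied / total * 100


def format_copy_message(source_path: str, status: str, copy_progress: str) -> str:
    """Mirror the message printed by the PowerShell progress script."""
    return f"{source_path} {status} {copy_progress_percent(copy_progress):.2f}%"
```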

Configure Content Delivery Network (CDN)

A Content Delivery Network (CDN) is typically used to cache data at locations around the world so that it is served to users from a nearby node.

You can enable Azure Content Delivery Network (CDN) to cache content from Azure storage. It can cache blobs and static content of compute instances at physical nodes at each of the locations where the CDN service is available.

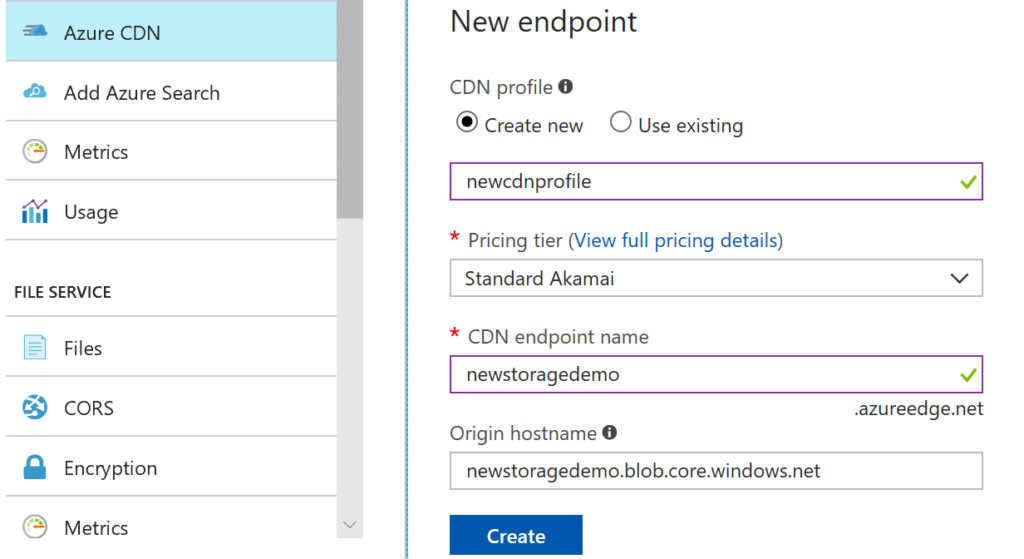

To enable Azure CDN for a storage account from the portal, do the following:

Select a storage account from the dashboard, then select Azure CDN from the left pane. Create a new endpoint by entering the required information:

- CDN Profile: Create a new CDN profile or use an existing CDN profile.

- Pricing tier: Select a pricing tier only if you are creating a CDN profile.

- CDN endpoint name: Enter a CDN endpoint name.

Click Create to finish creating the endpoint.


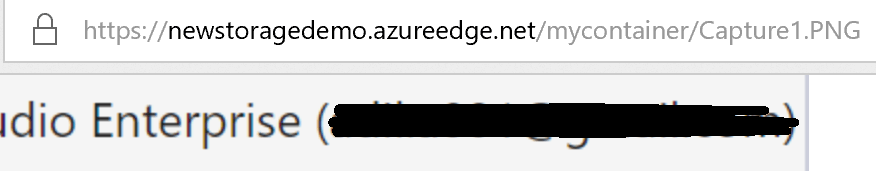

As an example, I added a PNG file to my container as a block blob and was able to access it publicly. Make sure the container's access level allows public read access. Otherwise, the blob cannot be served anonymously.
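An Azure CDN endpoint keeps the container and blob path of the origin and only changes the host name to `<endpoint-name>.azureedge.net`. Below is a minimal Python sketch of that mapping. It assumes the endpoint has no custom origin path configured. The helper and the endpoint name `newstoragedemo-cdn` are hypothetical.

```python
from urllib.parse import urlsplit


def cdn_url_for_blob(blob_url: str, endpoint_name: str) -> str:
    """Map a Blob storage URL to the matching Azure CDN endpoint URL."""
    parts = urlsplit(blob_url)
    if not parts.netloc.endswith(".blob.core.windows.net"):
        raise ValueError("not a Blob storage URL")
    # The container/blob path stays the same; only the host name changes.
    return f"https://{endpoint_name}.azureedge.net{parts.path}"


print(cdn_url_for_blob(
    "https://newstoragedemo.blob.core.windows.net/mycontainer/logo.png",
    "newstoragedemo-cdn"))
```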

If you no longer want to cache an object in Azure CDN, you can take one of the following steps. Note that an object that is already cached stays in the CDN until its time-to-live (TTL) expires.

- Make the container private instead of public.

- Disable or delete the CDN endpoint by using the Azure portal.

Design Blob Hierarchies

By now you should know that the default hierarchy within Azure Storage is:

Storage account » Container » Blob name

A virtual hierarchy is most often created by adding a prefix to the name of the blob itself. An example makes this easier to understand:

Storage Account » [blob.core.windows.net] » raw » pictures/picture1.png

Storage Account » [blob.core.windows.net] » raw » audio/audio1.pcm

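In the above case, the / character acts as a delimiter, so pictures/ and audio/ are the prefixes. Blob storage has no real folders, so using a delimiter in blob names is how you create a virtual hierarchy.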
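When you list blobs with a prefix and a delimiter (the `prefix` and `delimiter` parameters of the List Blobs operation), the service returns the blobs directly under that prefix plus a "BlobPrefix" entry for each virtual folder. Below is a minimal Python sketch that emulates this locally on the example names above. The function is hypothetical and does not call Azure.

```python
def list_with_delimiter(blob_names, prefix="", delimiter="/"):
    """Emulate List Blobs with prefix/delimiter: return (blobs, virtual folder prefixes)."""
    blobs, prefixes = [], set()
    for name in sorted(blob_names):
        if not name.startswith(prefix):
            continue
        rest = name[len(prefix):]
        cut = rest.find(delimiter)
        if cut == -1:
            blobs.append(name)  # no further delimiter: a blob at this level
        else:
            # Everything up to and including the delimiter forms a virtual folder.
            prefixes.add(prefix + rest[:cut + len(delimiter)])
    return blobs, sorted(prefixes)


names = ["pictures/picture1.png", "audio/audio1.pcm", "readme.txt"]
print(list_with_delimiter(names))
print(list_with_delimiter(names, prefix="pictures/"))
```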

NOTE: There is one exception to this rule. Azure Blob storage supports a root container, named $root, which serves as the default container for a storage account. A blob stored in the root container can be addressed without referencing the root container name.
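Below is a minimal Python sketch of how a blob URL path resolves to a container and blob name when the root container is taken into account. The helper is hypothetical. It assumes that a path with a single segment refers to a blob in the root container (`$root`), because blobs in the root container cannot contain a forward slash in their names.

```python
from urllib.parse import unquote, urlsplit


def resolve_blob_path(url: str):
    """Return (container, blob_name) for a Blob storage URL."""
    path = unquote(urlsplit(url).path).lstrip("/")
    container, sep, blob = path.partition("/")
    if not sep:
        # A single path segment addresses a blob in the root container.
        return "$root", container
    return container, blob
```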

Configure Custom Domains

You can configure a custom domain for accessing blob data in your Azure storage account. As explained earlier, the default endpoint for Blob storage is <storage-account-name>.blob.core.windows.net.

There are two ways that you can create custom domains for your Blob storage endpoint.

- Direct CNAME mapping.

- Intermediary mapping using asverify.

The following article explains both approaches in detail:

Configure a custom domain name for your Blob storage endpoint
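Both approaches come down to CNAME records at your DNS provider. With direct mapping, the custom domain points straight at `<account>.blob.core.windows.net`. With intermediary mapping, you first create an `asverify` record so that Azure can verify the domain without any downtime, and you add the direct record afterwards. Below is a minimal Python sketch that generates the records as (name, target) pairs. The helper and `www.contoso.com` are hypothetical.

```python
def custom_domain_cname_records(custom_domain: str, account: str, use_asverify: bool = False):
    """Return the CNAME record(s) as (record name, target) pairs."""
    target = f"{account}.blob.core.windows.net"
    if use_asverify:
        # Intermediary mapping: lets Azure verify the domain before live
        # traffic is pointed at the storage account.
        return [(f"asverify.{custom_domain}", f"asverify.{target}")]
    # Direct mapping: the custom domain resolves straight to the Blob endpoint.
    return [(custom_domain, target)]
```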

Scale Blob Storage

When using Blob storage, you need to keep in mind the scalability limits of a storage account and of the Blob service in particular.

The following table highlights the most important limits.

| Resource | Target |
|---|---|
| Max size of a single blob container | 500 TiB |
| Max number of blocks in a block blob or append blob | 50,000 blocks |
| Max size of a block in a block blob | 100 MiB |
| Max size of a block blob | 50,000 x 100 MiB (approx. 4.77 TiB) |
| Max size of a block in an append blob | 4 MiB |
| Max size of an append blob | 50,000 x 4 MiB (approx. 195 GiB) |
| Max size of a page blob | 8 TiB |
| Max number of stored access policies per blob container | 5 |
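The block blob and append blob maximums in the table are simply the block count multiplied by the block size. Below is a quick Python check of that arithmetic using binary units (MiB, GiB, TiB). It uses the limits in the table above, which were current when this post was written.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4
MAX_BLOCKS = 50_000  # max number of blocks in a block blob or append blob


def max_blob_size_bytes(block_size_bytes: int, max_blocks: int = MAX_BLOCKS) -> int:
    """Maximum size of a block-based blob: number of blocks times block size."""
    return max_blocks * block_size_bytes


block_blob = max_blob_size_bytes(100 * MIB)  # 100 MiB blocks
append_blob = max_blob_size_bytes(4 * MIB)   # 4 MiB blocks

print(f"Block blob:  {block_blob / TIB:.2f} TiB")   # Block blob:  4.77 TiB
print(f"Append blob: {append_blob / GIB:.2f} GiB")  # Append blob: 195.31 GiB
```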

Manage SMB File Storage

This sub-objective is about Azure Files, which provides simple, secure, and fully managed cloud file shares that you can access over SMB.

I have already covered this topic in detail in the post on Azure IaaS Virtual Machines.

Implement Azure StorSimple

The Microsoft Azure StorSimple Virtual Array is an integrated storage solution that manages storage tasks between an on-premises virtual array running in a hypervisor and Microsoft Azure cloud storage.

Read this article here for a complete overview of the solution.

You can also download the Microsoft Azure StorSimple Getting Started Guides by clicking here.

I hope this has been informative and thank you for reading!