Welcome to the last post in the Design and Implement a Storage Strategy section of the Azure 70-533 series, titled Objective 3.4 - Implement Storage Encryption. In our previous post, we discussed diagnostics, monitoring, and analytics for an Azure Storage account.

As the title suggests, this post deals with Azure Storage Encryption. It has two sub-objectives that we need to pay attention to:

- Encrypt data as written to Azure Storage by using Azure Storage Service Encryption (SSE)

- Implement encrypted and role-based security for data managed by Azure Data Lake Store

Without further ado, let's jump on to the first sub-objective.

Encrypt data as written to Azure Storage by using Azure Storage Service Encryption (SSE)

Azure Storage Service Encryption (SSE) for Data at Rest helps you protect and safeguard your data to meet your organization's security and compliance requirements.

When this feature is enabled, Azure Storage automatically encrypts your data before writing it to the underlying storage and decrypts it before retrieval.

SSE provides encryption at rest and handles encryption, decryption, and key management in a way that is completely transparent to users. All data is encrypted using 256-bit AES encryption.

As explained above, SSE encrypts data before writing it to Azure Storage and, at the time of writing this article, can be used for all four storage services (Blob, File, Table, and Queue).

It works for the following:

- Standard storage: general-purpose storage accounts (Blob, File, Table, and Queue storage) and Blob storage accounts.

- Premium storage.

- Classic and Azure Resource Manager (ARM) storage accounts, for both of which it is enabled by default.

- For all redundancy levels (LRS, ZRS, GRS, RA-GRS).

- Available in all regions.

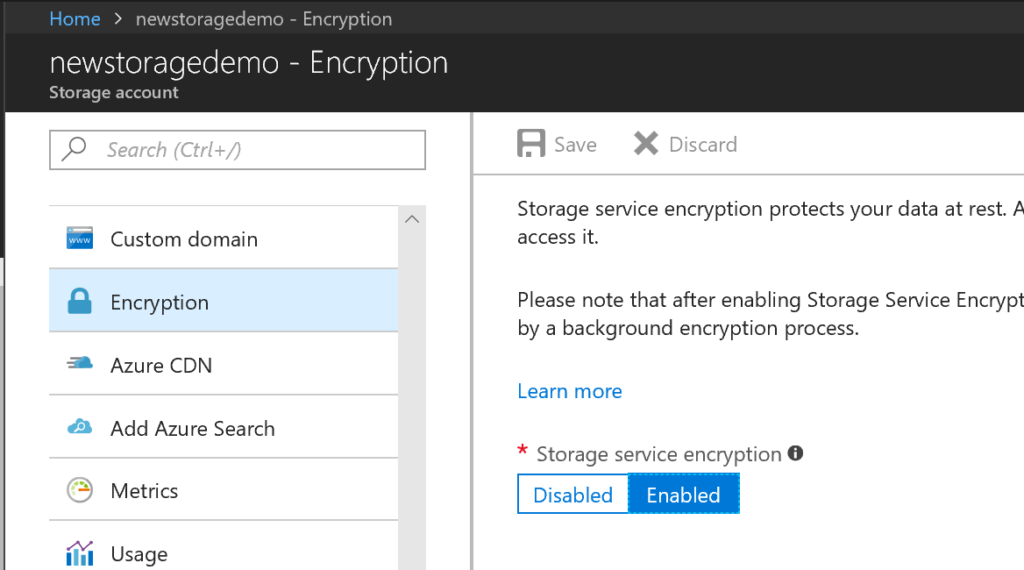

To view the Storage Service Encryption settings for a storage account, log in to the Azure portal and select the storage account. On the Settings blade, find the Blob Service section, as shown in the screenshot above, and click Encryption.

Below is a handy summary of the encryption and decryption workflow once SSE is enabled for a storage account.

- When the customer writes new data (PUT Blob, PUT Block, PUT Page, PUT File, etc.) to Blob or File storage, every write is encrypted using 256-bit AES encryption.

- When the customer reads data (GET Blob, etc.), the data is automatically decrypted before being returned to the user.

- If encryption is disabled, new writes are no longer encrypted and existing encrypted data remains encrypted until rewritten by the user.

- While encryption is enabled, writes to Blob or File storage are encrypted. Toggling encryption on or off for the storage account does not change the state of data that has already been written.

- All encryption keys are stored, encrypted, and managed by Microsoft.
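To make the enable/disable semantics above concrete, here is a minimal Python sketch built around a hypothetical `ToyStorageAccount` class. It is a simulation, not the Azure SDK, and it swaps in a toy SHA-256 keystream cipher for AES-256 purely so that "encrypted at rest" becomes observable; none of these names come from Azure. What it shows is that the encryption setting at the moment of a write decides how that data is stored, reads are always decrypted transparently, and toggling the setting leaves existing data as it is until it is rewritten.

```python
import hashlib
import os


# ASSUMPTION: toy stand-in cipher (SHA-256 counter-mode keystream XORed with the data).
# It is NOT AES-256 and NOT secure; it only makes "encrypted at rest" observable.
def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def _xor(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyStorageAccount:
    """Hypothetical model of the SSE workflow: encrypt on write, decrypt on read."""

    def __init__(self, encryption_enabled: bool = True) -> None:
        self.encryption_enabled = encryption_enabled
        # 256-bit key held by the "service"; the caller never handles it.
        self._key = os.urandom(32)
        # name -> (encrypted_at_rest, nonce, stored_bytes)
        self._store = {}

    def put(self, name: str, data: bytes) -> None:
        # The setting at the moment of the write decides how the data is stored.
        if self.encryption_enabled:
            nonce = os.urandom(16)
            ciphertext = _xor(data, _keystream(self._key, nonce, len(data)))
            self._store[name] = (True, nonce, ciphertext)
        else:
            self._store[name] = (False, b"", data)

    def get(self, name: str) -> bytes:
        # Reads are decrypted transparently; callers always get plaintext back.
        encrypted, nonce, stored = self._store[name]
        if encrypted:
            return _xor(stored, _keystream(self._key, nonce, len(stored)))
        return stored

    def is_encrypted_at_rest(self, name: str) -> bool:
        return self._store[name][0]


if __name__ == "__main__":
    account = ToyStorageAccount(encryption_enabled=True)
    account.put("a.txt", b"hello")
    account.encryption_enabled = False  # toggling does not touch stored data
    account.put("b.txt", b"world")
    print(account.get("a.txt"), account.is_encrypted_at_rest("a.txt"))  # b'hello' True
    print(account.get("b.txt"), account.is_encrypted_at_rest("b.txt"))  # b'world' False
    account.put("a.txt", b"hello again")  # rewritten while disabled: stored unencrypted
    print(account.is_encrypted_at_rest("a.txt"))  # False
```

Keeping the key inside the object mirrors the point that keys are managed by the service rather than by the caller.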

For more information, refer to the FAQ section of the Azure Storage Service Encryption documentation.

Implement encrypted and role-based security for data managed by Azure Data Lake Store

Azure Data Lake Store is an enterprise-wide hyper-scale repository for big data analytics workloads. Azure Data Lake enables you to capture data of any size, type, and ingestion speed in a single place for operational and exploratory analytics.

Securing data in Azure Data Lake Store is a three-step approach.

- Start by creating security groups in Azure Active Directory (AAD). These security groups are used to implement role-based access control (RBAC) in the Azure portal.

- Assign the AAD security groups to the Azure Data Lake Store account. This controls access to the Data Lake Store account from the portal and management operations from the portal or APIs.

- Assign the AAD security groups as access control lists (ACLs) on the Data Lake Store file system.
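The three steps can be modelled as a minimal Python sketch, assuming hypothetical group names, users, role assignments, and paths; it is not the Data Lake Store API. The point it illustrates is that the account-level role assignment (step 2) and the file-system ACLs (step 3) are checked independently: a user whose group only has the Reader role on the account cannot manage it, yet can still read data under a path where an ACL grants that group read permission. The real service evaluates more rules (for example, default ACLs and execute permission on parent folders), which this sketch ignores.

```python
# ASSUMPTION: all group names, users, role assignments, and paths are hypothetical.

# Step 1: AAD security groups and their members.
AAD_GROUPS = {
    "adls-engineers": {"alice"},
    "adls-analysts": {"bob"},
}

# Step 2: RBAC role assignments on the Data Lake Store account.
# These govern portal access and management operations, not file data.
ACCOUNT_ROLES = {
    "adls-engineers": "Contributor",
    "adls-analysts": "Reader",
}
MANAGEMENT_ROLES = {"Contributor"}

# Step 3: ACL entries on file-system paths (group -> rwx-style permission string).
PATH_ACLS = {
    "/raw": {"adls-engineers": "rwx"},
    "/curated": {"adls-engineers": "rwx", "adls-analysts": "r-x"},
}


def groups_of(user: str) -> set:
    return {group for group, members in AAD_GROUPS.items() if user in members}


def can_manage_account(user: str) -> bool:
    # Management access comes only from the account-level role assignment.
    return any(ACCOUNT_ROLES.get(g) in MANAGEMENT_ROLES for g in groups_of(user))


def can_access(user: str, path: str, perm: str) -> bool:
    # Data access comes only from the ACL on the path; perm is "r", "w" or "x".
    # Simplification: exact-path lookup, no inheritance or parent-folder checks.
    acl = PATH_ACLS.get(path, {})
    return any(perm in acl.get(g, "---") for g in groups_of(user))


if __name__ == "__main__":
    print(can_manage_account("bob"))           # False: Reader cannot manage
    print(can_access("bob", "/curated", "r"))  # True: granted by the ACL
    print(can_access("bob", "/raw", "r"))      # False: no ACL entry for analysts
```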

You can also restrict access to the data in Data Lake Store to clients within a specified IP address range.
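An IP address range restriction boils down to checking each client address against a list of allowed ranges. The Python sketch below assumes each rule is an inclusive start/end pair; the rule names and addresses (taken from documentation-only address blocks) are hypothetical, and this is not how the service implements its firewall internally.

```python
import ipaddress

# ASSUMPTION: hypothetical firewall rules as inclusive (name, start IP, end IP) ranges.
FIREWALL_RULES = [
    ("office", "203.0.113.10", "203.0.113.50"),
    ("build-agent", "198.51.100.7", "198.51.100.7"),
]


def client_allowed(client_ip: str, rules=FIREWALL_RULES) -> bool:
    ip = ipaddress.ip_address(client_ip)
    for _name, start, end in rules:
        low, high = ipaddress.ip_address(start), ipaddress.ip_address(end)
        # IPv4 and IPv6 addresses cannot be compared, so skip rules of another version.
        if ip.version == low.version == high.version and low <= ip <= high:
            return True
    return False


if __name__ == "__main__":
    print(client_allowed("203.0.113.25"))  # True
    print(client_allowed("203.0.113.51"))  # False
    print(client_allowed("198.51.100.7"))  # True
```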

Below is an excellent article that explains these steps and includes relevant screenshots.

Securing Data stored in Azure Data Lake Store

I hope this has been informative, and thank you for reading!